How to get PowerShell transcript logs from client machines remotely in Azure Blob Storage

Any Intune administrator or sysadmin knows how important PowerShell scripting is for achieving a vast array of powerful tasks across your IT infrastructure.

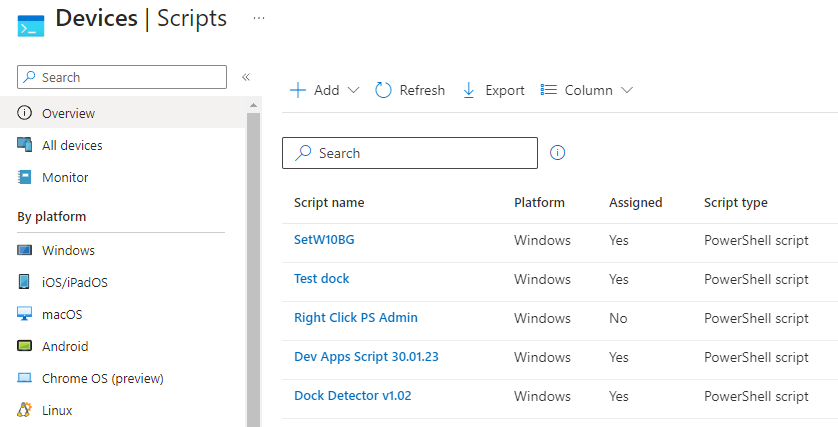

Intune Powershell Scripting Interface

From setting up company VPNs to more complicated bespoke jobs, these scripts can do a lot, but insight into how they execute on the target machines is quite limited natively in Intune, and has been for some time.

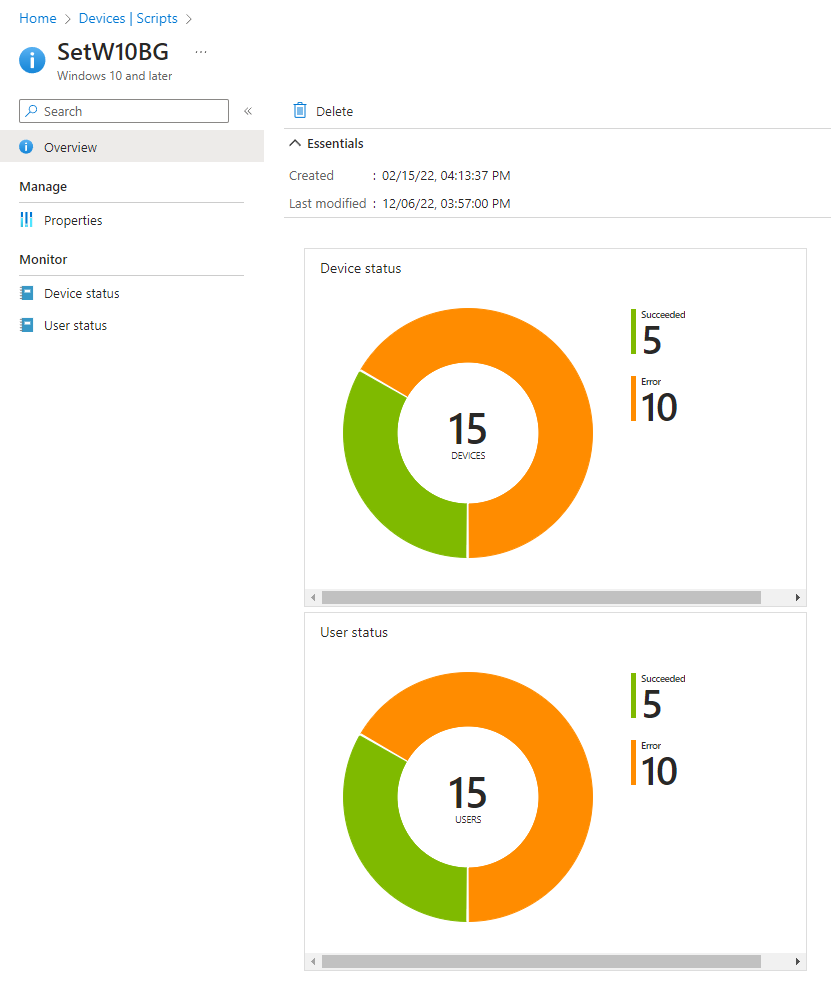

Device success/fail breakdown chart

Once a script is assigned via device or user assignment, the script will eventually be pulled down via the Intune Management Extension and executed on the client machine.

As depicted in the image above, the Intune portal gives the user a pie chart style summary of the script results, showing the success and failure rates.

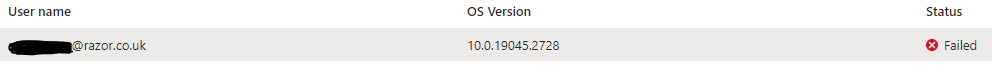

If you drill into the device or user status, you can click on a machine entry to see a little bit more information, as below:

Not very helpful… your script failed on the client but wouldn’t it be useful to know ‘why?’

You may have tested this script successfully on your personal computer or a virtual machine in the cloud. However, this doesn’t help when you are deploying to a number of different machines that could be spread across various departments or locations.

These machines could:

- Have different OS and application versions

- Have an out-of-date version of PowerShell

- Have permissions or script execution issues

Wouldn’t it be useful to see the logs from that machine straight away, from the comfort of your own workstation, without interacting with the user or their machine and potentially disrupting their day-to-day work?

Enter the power of PowerShell transcript logging and Azure Blob Storage! 💪

With the help of some commands built into Microsoft’s Az.Storage PowerShell module, it’s quite easy to upload the transcript at the end of script execution to a place you can access instantly, such as Azure Blob storage or other kinds of remote file storage.

The basic steps to achieve this are as follows:

- Ensure end devices have the latest version of the Az PowerShell modules installed on them, as interacting with Azure Blob Storage requires Az.Storage in particular

- To make things a tad easier you can use my own PowerShell module, BlobTranscript, which is just a basic function wrapper for PS to communicate with Azure storage blobs

- Create a suitable blob file storage in your Azure tenant and create a SAS key for use in the Intune scripts

- Amend your PowerShell scripts to include the following:

  - Start and stop transcript - to create a local log

  - Function to upload the transcript file to the blob storage

Deploy the required Powershell modules to your endpoints

Before you can take advantage of enhanced logging, the client machines will need a few PowerShell modules installed.

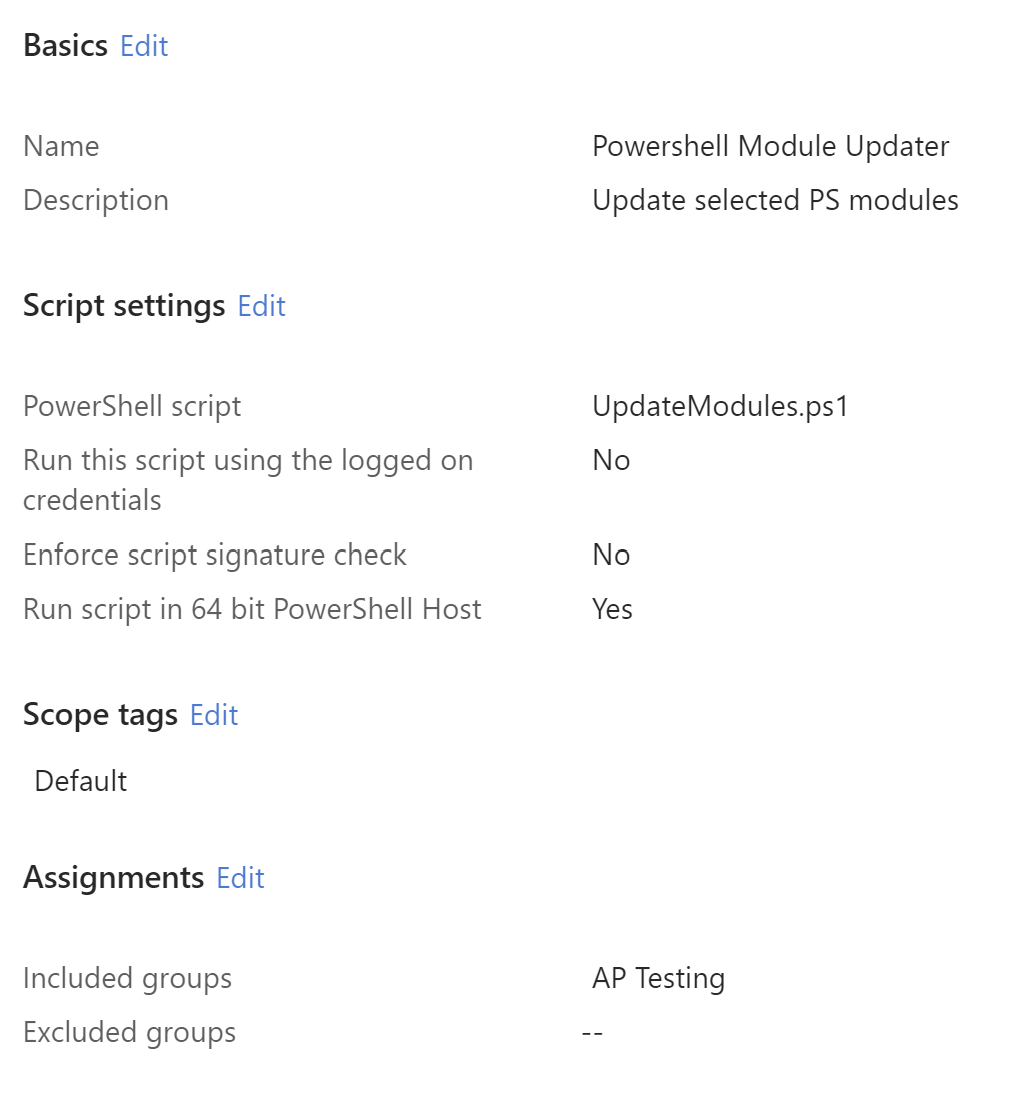

Create a new PS script and assign to your desired groups

This is the script from my previous article: Powershell Module Updating

This function will not just try to install the modules; it also checks for older versions and updates them where appropriate. It includes error handling for modules that were not originally installed via Install-Module, which I found was quite common during testing.

```powershell
# PS Modules to install/update - List your modules here:
$modules = @(
    'PowershellGet'
    'BlobTranscript'
    'Az.Storage'
)

function Update-Modules([string[]]$modules) {
    # Loop through each module in the list
    foreach ($module in $modules) {
        # Get the version of the latest available module from gallery
        # (done first so it is also available to the "not installed" branch)
        $latestVersion = [version](Find-Module -Name $module).Version

        # Check if the module exists
        if (Get-Module -Name $module -ListAvailable) {
            # Get the highest version of the currently installed module
            $installedVersion = (Get-Module -Name $module -ListAvailable |
                    Sort-Object Version -Descending |
                    Select-Object -First 1).Version
            Write-Host "Current version $module [$installedVersion]"

            # Compare as [version] objects; comparing strings would rank 10.0 below 9.0
            if ($installedVersion -lt $latestVersion) {
                # Update the module
                Write-Host "Found latest version $module [$latestVersion], updating.."
                # Attempt to update module via Update-Module
                try {
                    Update-Module -Name $module -Force -ErrorAction Stop -Scope AllUsers
                    Write-Host "Updated $module to [$latestVersion]"
                }
                # Catch error and force install newer module
                catch {
                    Write-Host $_
                    Write-Host "Force installing newer module"
                    Install-Module -Name $module -Force -Scope AllUsers
                    Write-Host "Updated $module to [$latestVersion]"
                }
            }
            else {
                # Already latest installed
                Write-Host "Latest version already installed"
            }
        }
        else {
            # Install the module if it doesn't exist
            Write-Host "Module not found, installing $module [$latestVersion].."
            Install-Module -Name $module -Repository PSGallery -Force -AllowClobber -Scope AllUsers
        }
    }
}

Update-Modules -modules $modules
```
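One detail worth checking in any updater like this is how the version numbers are compared. Here is a minimal sketch, assuming the versions are plain dotted numbers such as 9.0.0. The helper name Test-NewerVersion is hypothetical and not part of any module. Casting both sides to [version] compares them number by number, whereas comparing them as strings goes character by character.

```powershell
# Hypothetical helper: returns $true when $Available is newer than $Installed.
function Test-NewerVersion {
    param(
        [Parameter(Mandatory)][string]$Installed,
        [Parameter(Mandatory)][string]$Available
    )
    # Casting to [version] compares each numeric component in turn
    return ([version]$Installed -lt [version]$Available)
}

# String comparison looks at characters, so '10.0.0' sorts before '9.0.0'
'10.0.0' -lt '9.0.0'                                      # True (misleading)
Test-NewerVersion -Installed '10.0.0' -Available '9.0.0'  # False
Test-NewerVersion -Installed '2.2.4' -Available '2.2.5'   # True
```

The cast will throw on anything that is not a dotted number (a prerelease label, for example), so treat this as a starting point rather than a complete solution.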

Az.Storage is just a sub-module of the primary Az Azure collection but it’s the only one required to manipulate blob storage which is all we are doing.

BlobTranscript allows you to simply use the included Send-Transcript command anywhere in your scripts to upload the log file to your designated storage.

To test this beforehand I used a Windows VM in Azure, but feel free to just use your local machine.

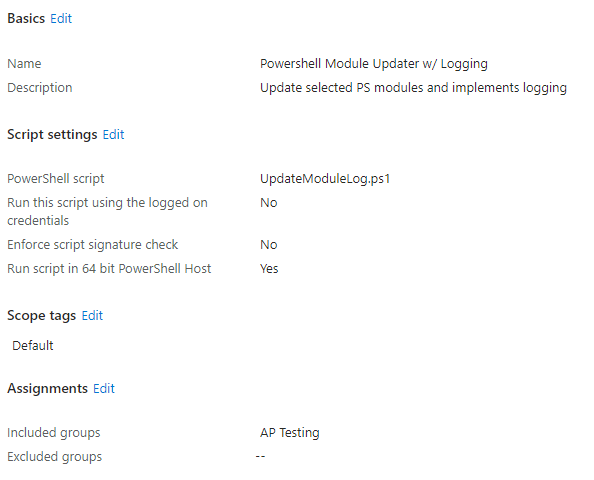

Upload a new PowerShell script within Intune

I’ve assigned it to a testing group, ‘AP Testing’, that I created in Azure AD and that contains the lab VM username.

Once assigned, to quickly force the client to pull down the new script, restart the “Microsoft Intune Management Extension” service.
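If you would rather do this from PowerShell than from the Services console, a minimal sketch is below. It looks the service up by the display name quoted above and needs an elevated session on the client. The function name Restart-IntuneAgent is just an illustration of mine.

```powershell
# Hypothetical helper: restarts the Intune Management Extension service
# so that it checks in and pulls down newly assigned scripts.
# Run from an elevated (administrator) PowerShell session on the client.
function Restart-IntuneAgent {
    param(
        # Display name as it appears in the Services console
        [string]$DisplayName = 'Microsoft Intune Management Extension'
    )
    Get-Service -DisplayName $DisplayName -ErrorAction Stop | Restart-Service -Force
}

# Usage:
# Restart-IntuneAgent
```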

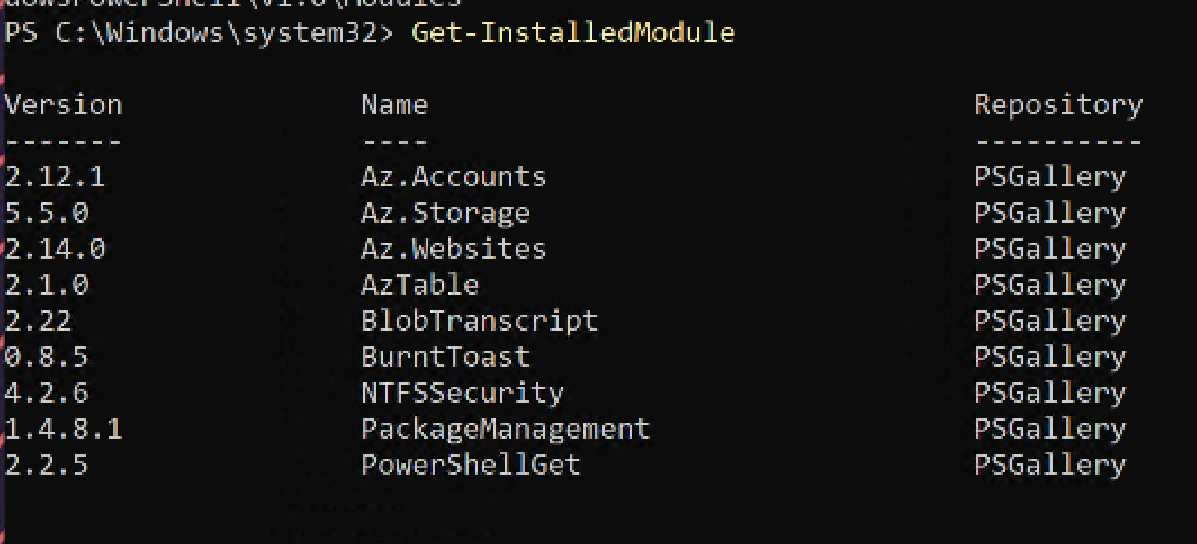

From checking, you can see the modules were installed/updated on the client machine:

Result of Get-InstalledModule
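To repeat this check yourself, something along these lines should work. I have used Get-Module -ListAvailable in this sketch rather than Get-InstalledModule, on the assumption that some modules on the machine may not have been installed via Install-Module. The function name Get-ModuleStatus is hypothetical.

```powershell
# Hypothetical helper: reports whether each named module is present and at which version.
function Get-ModuleStatus {
    param([Parameter(Mandatory)][string[]]$Name)

    foreach ($n in $Name) {
        # Pick the highest version found on the module path, if any
        $found = Get-Module -Name $n -ListAvailable |
            Sort-Object Version -Descending |
            Select-Object -First 1

        [pscustomobject]@{
            Name      = $n
            Installed = [bool]$found
            Version   = if ($found) { $found.Version.ToString() } else { $null }
        }
    }
}

# Check the three modules deployed by the updater script
Get-ModuleStatus -Name 'PowershellGet', 'BlobTranscript', 'Az.Storage'
```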

Intune reports that the script was successfully deployed.

Set up blob storage in Azure

The chosen storage medium for this was Blob storage as I’m going to assume you have access to this since you are utilising Microsoft Intune on your estate.

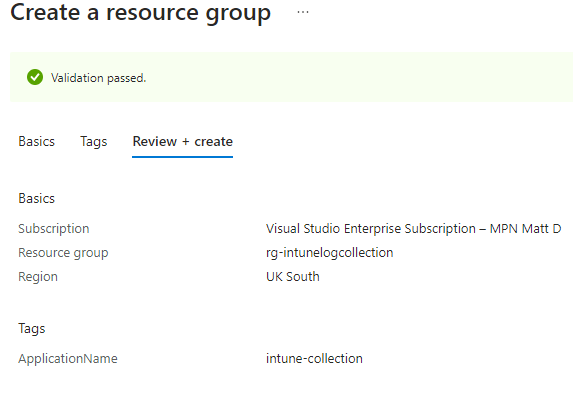

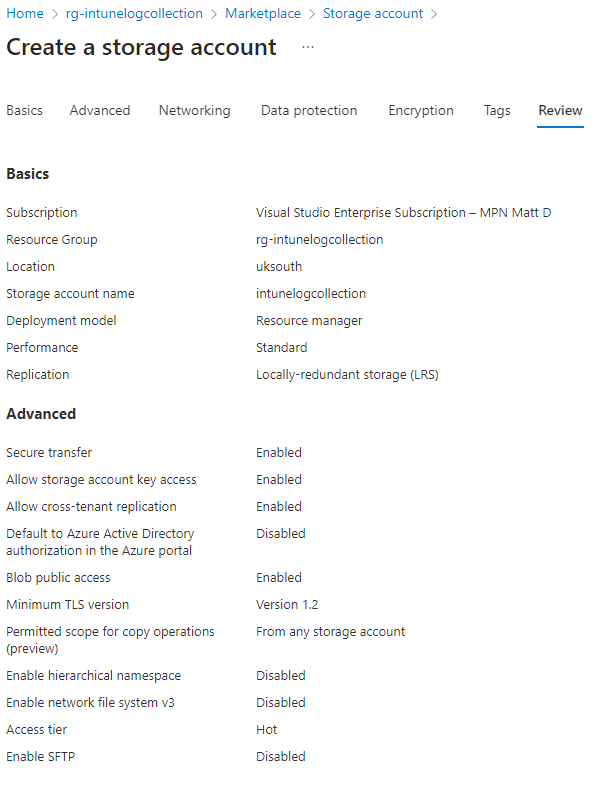

Create a new Resource Group and Storage Account

Create a new resource group in Azure with default settings and choose a name. I’m using my Azure monthly credits in this example.

Create a new storage account within the new resource group. Keep all settings at their defaults aside from Replication, which should be set to Locally-redundant storage (LRS).

We don’t require any kind of data redundancy on these files as they are just for diagnostics and testing.

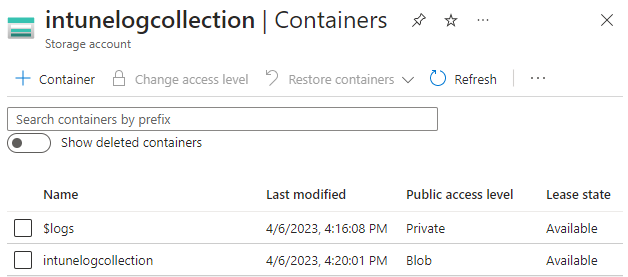

- Once the storage has deployed, in the left column scroll down to 📁Containers

- Click ➕ Container

- Name: intunelogcollection

- Public access level: Blob
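If you prefer scripting to clicking through the portal, the same setup can be sketched with the Az PowerShell cmdlets. This assumes the Az.Resources and Az.Storage modules are installed on your admin workstation and that you have already signed in with Connect-AzAccount. The function name and the default region are placeholders of mine, not values from the walkthrough, and storage account names must be globally unique, so you will need to choose your own.

```powershell
# Hypothetical helper: creates the resource group, an LRS storage account and the log container.
# Requires Az.Resources and Az.Storage, plus an authenticated session (Connect-AzAccount).
function New-TranscriptStorage {
    param(
        [Parameter(Mandatory)][string]$ResourceGroupName,
        [Parameter(Mandatory)][string]$StorageAccountName,
        [string]$ContainerName = 'intunelogcollection',
        [string]$Location = 'uksouth'   # placeholder region, pick your own
    )

    New-AzResourceGroup -Name $ResourceGroupName -Location $Location | Out-Null

    # Standard_LRS = locally-redundant storage, as chosen in the portal steps
    $account = New-AzStorageAccount -ResourceGroupName $ResourceGroupName `
        -Name $StorageAccountName -Location $Location `
        -SkuName 'Standard_LRS' -Kind 'StorageV2'

    # -Permission Blob mirrors the "Public access level: Blob" setting above.
    # It may be rejected if public blob access is disabled on the account.
    New-AzStorageContainer -Name $ContainerName -Context $account.Context -Permission Blob
}

# Usage (names are examples only):
# New-TranscriptStorage -ResourceGroupName 'rg-intune-logs' -StorageAccountName 'mylogstore123'
```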

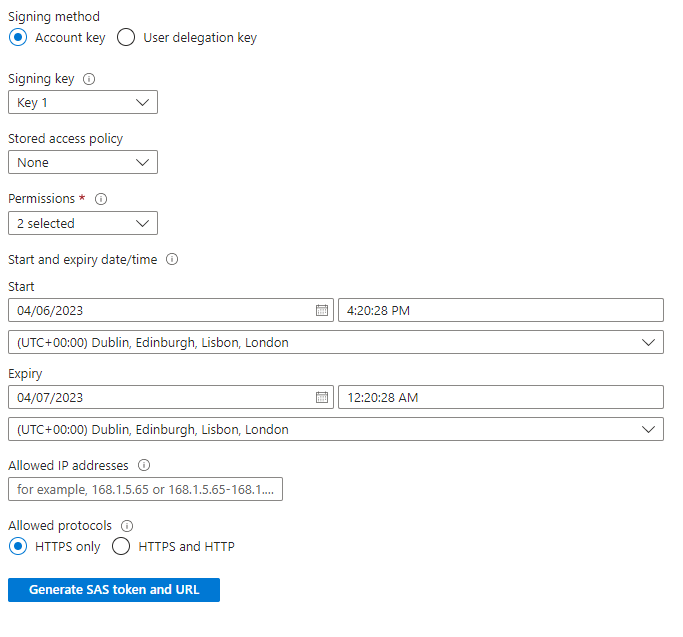

- Once the container has been created, head into ♾️Shared access tokens on the left blade

- Set the following values:

- Permissions: Read, Write

- Click Generate SAS token and URL

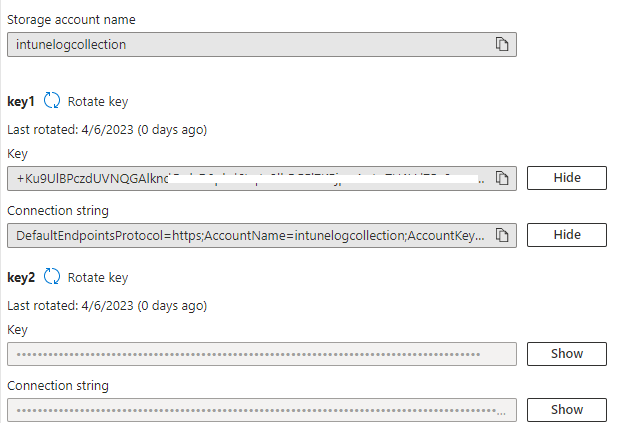

- Go back to the previous Storage account window and click 🔑Access keys on the left-hand blade

- Copy the value from key1 below:
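The key can also be read with Az.Storage instead of copying it from the portal. This is a minimal sketch, assuming you are signed in with Connect-AzAccount and have permission to list keys on the account. The function name is hypothetical.

```powershell
# Hypothetical helper: returns the key1 value for a storage account.
# Requires Az.Storage and an authenticated session (Connect-AzAccount).
function Get-TranscriptStorageKey {
    param(
        [Parameter(Mandatory)][string]$ResourceGroupName,
        [Parameter(Mandatory)][string]$StorageAccountName
    )

    $keys = Get-AzStorageAccountKey -ResourceGroupName $ResourceGroupName -Name $StorageAccountName

    # Each entry has a KeyName (key1 / key2) and a Value
    ($keys | Where-Object KeyName -eq 'key1').Value
}

# Usage (names are examples only):
# $storageAccountKey = Get-TranscriptStorageKey -ResourceGroupName 'rg-intune-logs' -StorageAccountName 'mylogstore123'
```

Bear in mind that an account key grants full access to the storage account, so treat it like a password wherever it ends up.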

Implement transcript upload in your scripts

Create or amend one of the PowerShell scripts that you want to deploy via Intune and add these variables to the top.

These will be passed into the functions:

- Start-Transcript - Starts logging PowerShell output to a file. Be sure to use verbose output in your scripts to enhance the level of logging

- Send-Transcript - Uploads the log file to your blob storage location

```powershell
$computerName = hostname
$userName = $env:USERNAME # Grab current username
$date = (Get-Date).ToString("yyyy-MM-dd-HH-mm-ss")
$logPath = "C:\Intune\logs" # Where to store logs locally
$transcriptFile = "$logPath\$computerName-$userName-$date.transcript"
$scriptName = $MyInvocation.MyCommand.Name # Get current script name

# BlobTranscript Variables
$storageAccountName = "intunelogcollection" # Storage account name
$storageAccountKey = "**INSERT YOUR STORAGE KEY**" # Access key
$containerName = "intunelogcollection" # Container name
$blobName = "$computerName-$userName/$scriptName-$date" # Format the log file name

Start-Transcript -Path $transcriptFile # Start logging
Import-Module BlobTranscript # Imports the blob storage module
```

💡 Make sure you use the correct values for the storage account variables.

Paste the key1 value from the previous Access Key dialog box for the variable $storageAccountKey.

At the end of your script place the following code:

```powershell
Stop-Transcript # Stops transcript logging

# Upload the log file to blob storage
Send-Transcript -transcript $transcriptFile -storageAccountName $storageAccountName -storageAccountKey $storageAccountKey -containerName $containerName -blobName $blobName
```

This stops the transcript logging in PowerShell and then calls the Send-Transcript function from the BlobTranscript module to upload the log file to the blob storage.
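For anyone curious what a wrapper like this involves, here is a rough sketch built directly on Az.Storage. It is not the BlobTranscript source, just an illustration under the assumption that the upload authenticates with the storage account key. The function name Send-TranscriptSketch is hypothetical.

```powershell
# Hypothetical stand-in for an upload wrapper; NOT the actual BlobTranscript code.
function Send-TranscriptSketch {
    param(
        [Parameter(Mandatory)][string]$Transcript,          # Local transcript file
        [Parameter(Mandatory)][string]$StorageAccountName,
        [Parameter(Mandatory)][string]$StorageAccountKey,
        [Parameter(Mandatory)][string]$ContainerName,
        [Parameter(Mandatory)][string]$BlobName             # Name (including virtual folder) in the container
    )

    # Fail early with a clear message if there is nothing to upload
    if (-not (Test-Path -Path $Transcript -PathType Leaf)) {
        throw "Transcript file not found: $Transcript"
    }

    Import-Module Az.Storage -ErrorAction Stop

    # Build a storage context from the account name and key
    $context = New-AzStorageContext -StorageAccountName $StorageAccountName -StorageAccountKey $StorageAccountKey

    # Upload the file; -Force overwrites a blob with the same name without prompting
    Set-AzStorageBlobContent -File $Transcript -Container $ContainerName -Blob $BlobName -Context $context -Force | Out-Null
}
```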

A new folder is created for that particular device, and the log file is named using the following format:

$computerName-$userName/$scriptName-$date.log

DEV-2512-JoeBloggs/Script1-2023-04-07.log
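To see how the name is put together, here is a small sketch that builds it from the same pieces as the $blobName variable. The function name Get-TranscriptBlobName is hypothetical. Note that the date format used in the variables includes the time as well as the date, and that this sketch adds no file extension.

```powershell
# Hypothetical helper: builds a blob name in the same shape as $blobName.
function Get-TranscriptBlobName {
    param(
        [Parameter(Mandatory)][string]$ComputerName,
        [Parameter(Mandatory)][string]$UserName,
        [Parameter(Mandatory)][string]$ScriptName,
        [datetime]$Date = (Get-Date)
    )

    # Same timestamp format as the $date variable
    $stamp = $Date.ToString('yyyy-MM-dd-HH-mm-ss')

    # The forward slash makes "<computer>-<user>" appear as a folder in the container
    "$ComputerName-$UserName/$ScriptName-$stamp"
}

Get-TranscriptBlobName -ComputerName 'DEV-2512' -UserName 'JoeBloggs' -ScriptName 'Script1' -Date ([datetime]'2023-04-07T09:30:00')
# DEV-2512-JoeBloggs/Script1-2023-04-07-09-30-00
```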

Testing locally

In the below example I am using the module updater script from earlier but implementing transcript logging and uploading:

```powershell
#region Initial Variables
$computerName = hostname
$userName = $env:USERNAME # Grab current username
$date = (Get-Date).ToString("yyyy-MM-dd-HH-mm-ss")
$logPath = "C:\Intune\logs" # Where to store logs locally
$transcriptFile = "$logPath\$computerName-$userName-$date.transcript"
$scriptName = $MyInvocation.MyCommand.Name # Get script name

# BlobTranscript Variables
$storageAccountName = "intunelogcollection" # Storage account name
$storageAccountKey = "**INSERT YOUR STORAGE KEY**" # Access key
$containerName = "intunelogcollection" # Container name
$blobName = "$computerName-$userName/$scriptName-$date" # Format the log file name
#endregion

Start-Transcript -Path $transcriptFile # Start logging
Import-Module BlobTranscript # Imports the blob storage module

#region Main Script Body - Update Modules
# PS Modules to install/update - List your modules here:
$modules = @(
    'PowershellGet'
    'BlobTranscript'
    'Az.Storage'
)

function Update-Modules([string[]]$modules) {
    # Loop through each module in the list
    foreach ($module in $modules) {
        # Get the version of the latest available module from gallery
        # (done first so it is also available to the "not installed" branch)
        $latestVersion = [version](Find-Module -Name $module).Version

        # Check if the module exists
        if (Get-Module -Name $module -ListAvailable) {
            # Get the highest version of the currently installed module
            $installedVersion = (Get-Module -Name $module -ListAvailable |
                    Sort-Object Version -Descending |
                    Select-Object -First 1).Version
            Write-Host "Current version $module [$installedVersion]"

            # Compare as [version] objects; comparing strings would rank 10.0 below 9.0
            if ($installedVersion -lt $latestVersion) {
                # Update the module
                Write-Host "Found latest version $module [$latestVersion], updating.."
                # Attempt to update module via Update-Module
                try {
                    Update-Module -Name $module -Force -ErrorAction Stop -Scope AllUsers
                    Write-Host "Updated $module to [$latestVersion]"
                }
                # Catch error and force install newer module
                catch {
                    Write-Host $_
                    Write-Host "Force installing newer module"
                    Install-Module -Name $module -Force -Scope AllUsers
                    Write-Host "Updated $module to [$latestVersion]"
                }
            }
            else {
                # Already latest installed
                Write-Host "Latest version already installed"
            }
        }
        else {
            # Install the module if it doesn't exist
            Write-Host "Module not found, installing $module [$latestVersion].."
            Install-Module -Name $module -Repository PSGallery -Force -AllowClobber -Scope AllUsers
        }
    }
}

Update-Modules -modules $modules
#endregion

Stop-Transcript # Stops transcript logging

# Upload the log file to blob storage
Send-Transcript -transcript $transcriptFile -storageAccountName $storageAccountName -storageAccountKey $storageAccountKey -containerName $containerName -blobName $blobName
```

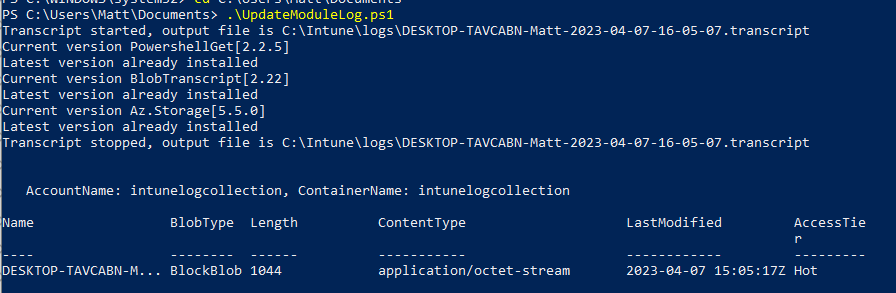

Running this locally on my own machine I get the following:

As you can see, the script calls the Blob storage with the provided parameters and uploads the completed transcript.

Testing via Intune

Assign this to a testing group in Intune with the same settings as before:

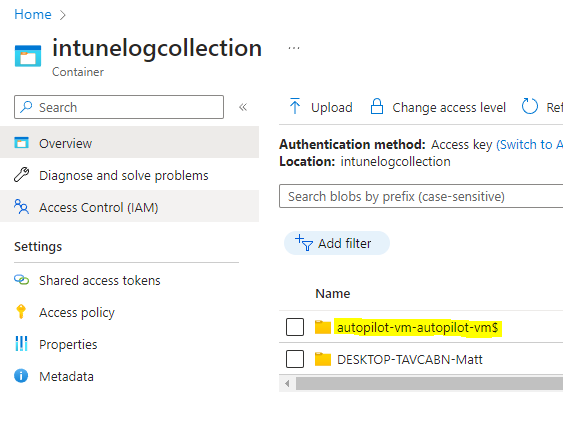

Trigger a check-in with Intune on your testing device and double-check that you see the folder and log file uploading to your storage.

The transcript log is saved locally on the target machine:

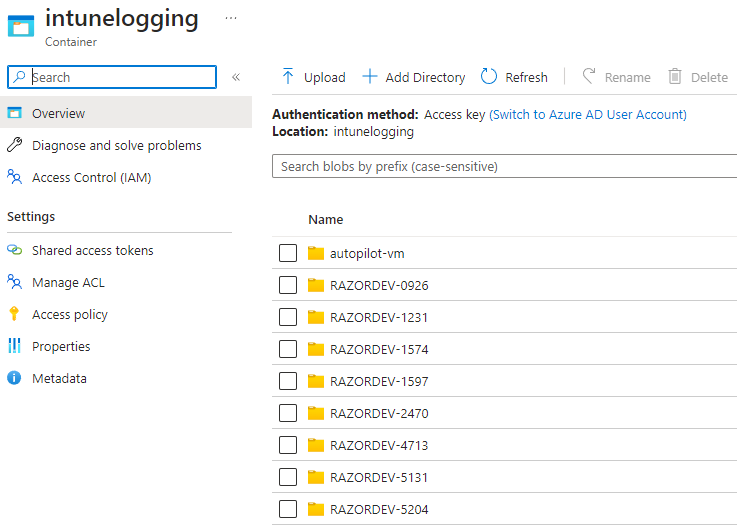

Voila! The folder is created and the log file is present in our blob storage container:

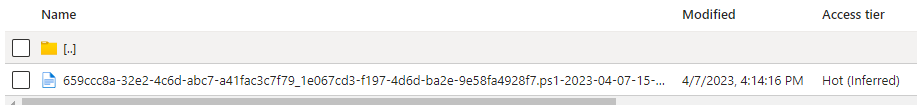

Unfortunately, when scripts are deployed via Intune they lose their initial script name, as you can see from the name of the uploaded log file.
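One possible workaround, sketched below, is to give each script a friendly name and fall back to it whenever the invoked file name looks generated. The assumption that a generated name starts with a GUID is mine and may not match what you see in your own container, so adjust the pattern as needed. The function name Resolve-ScriptName is hypothetical.

```powershell
# Hypothetical helper: returns a readable script name for use in the blob name.
function Resolve-ScriptName {
    param(
        [string]$InvokedName,                            # Usually $MyInvocation.MyCommand.Name
        [Parameter(Mandatory)][string]$FriendlyName      # Name you want to see in the logs
    )

    # ASSUMPTION: a generated file name begins with a GUID
    $guidPattern = '^[0-9a-fA-F]{8}(-[0-9a-fA-F]{4}){3}-[0-9a-fA-F]{12}'

    if ([string]::IsNullOrWhiteSpace($InvokedName) -or $InvokedName -match $guidPattern) {
        return $FriendlyName
    }

    # Otherwise keep the real file name, minus the .ps1 extension
    return [System.IO.Path]::GetFileNameWithoutExtension($InvokedName)
}

# Usage inside a deployed script:
# $scriptName = Resolve-ScriptName -InvokedName $MyInvocation.MyCommand.Name -FriendlyName 'Update-Modules'
```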

Download and save your log file, then open it with Notepad or any text editor. You can see anything that was written to the host during script execution, along with the output of any verbose commands.

This could be adapted to suit your individual needs and include error handling and much more in-depth information!
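As one example of what error handling could look like, the sketch below wraps the main body in try/catch/finally so that the transcript is stopped and uploaded even when the script fails part-way through. The function name Invoke-WithTranscript and its parameters are hypothetical. Only terminating errors reach the catch block, so commands inside the body would need -ErrorAction Stop for their failures to be caught.

```powershell
# Hypothetical wrapper: runs $Body under a transcript and always stops
# the transcript and runs $Upload afterwards, even if the body throws.
function Invoke-WithTranscript {
    param(
        [Parameter(Mandatory)][string]$TranscriptFile,
        [Parameter(Mandatory)][scriptblock]$Body,
        [scriptblock]$Upload = { }   # e.g. a call to Send-Transcript
    )

    Start-Transcript -Path $TranscriptFile | Out-Null
    try {
        & $Body
    }
    catch {
        # Write the failure into the transcript, then pass it on
        # so the script still reports a failure
        Write-Host "ERROR: $($_.Exception.Message)"
        Write-Host $_.ScriptStackTrace
        throw
    }
    finally {
        Stop-Transcript | Out-Null
        & $Upload
    }
}

# Usage with the variables and functions from the script above:
# Invoke-WithTranscript -TranscriptFile $transcriptFile -Body {
#     Update-Modules -modules $modules
# } -Upload {
#     Send-Transcript -transcript $transcriptFile -storageAccountName $storageAccountName `
#         -storageAccountKey $storageAccountKey -containerName $containerName -blobName $blobName
# }
```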

I hope this was a useful post and I’m sure people can adapt this to their needs. I found this especially useful when testing script and app deployments across remote devices, where it can be difficult to track down faults when all you see in the portal is a pass or fail based on return codes from your scripts.

In the future I am going to try to evolve this into a more useful and easily deployable solution, similar to Windows Update for Business Reports in Azure. Stay tuned.

https://learn.microsoft.com/en-us/windows/deployment/update/wufb-reports-overview

Other suggestions for improvements could include:

- Inclusion of the Microsoft Intune logs or package installer logs such as winget and chocolatey for app deployment

- Other storage locations such as PowerBI, Teams, Slack Bots etc…

- Utilise Azure Key Vault

I’m honestly surprised Microsoft haven’t given us more tools and reporting potential for Endpoint Management. It’s a good job the community is doing what they can to fill these gaps!

Please feel free to critique, suggest improvements or post any errors or issues you are encountering and I will do my best to help resolve.

Happy Intuning! 🤓